What are some environmental factors that can impact the performance of a machine vision system, and how can they be mitigated?

Machine vision systems are deployed in a wide variety of settings around the world, so they must perform reliably in very different environments.

Ambient lighting conditions in a factory usually have the biggest environmental impact on machine vision performance. If a factory has windows, sunlight and shadows that shift over the course of the day and from season to season can affect how a system performs. We also sometimes see issues when a factory installs new overhead lighting or when a machine is moved to a new space.

A well-designed system should be able to handle changes in ambient light without any change in its measurement outputs. The best way to achieve this is with strong, repeatable machine vision lighting: good-quality machine vision lighting should overpower ambient effects. Strobed lighting combined with short camera exposure times normally allows for a crisp, repeatable image regardless of ambient light.
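A simplified way to see why strobes and short exposures help: assume each light source adds to a pixel's signal in proportion to its irradiance multiplied by how long it shines during the exposure, and that the strobe pulse fits inside the exposure window. Under that assumption, shortening the exposure toward the pulse length cuts the ambient contribution while leaving the strobe's contribution unchanged. The function name and the relative irradiance values below are illustrative, not measurements.

```python
def ambient_fraction(strobe_irradiance: float, pulse_s: float,
                     ambient_irradiance: float, exposure_s: float) -> float:
    """Share of the collected light that comes from ambient sources.

    Simplified model (an assumption): signal ~ irradiance x time.
    Ambient light is integrated for the whole exposure; the strobe only
    while it is on, and the pulse must fit inside the exposure.
    """
    if not 0 < pulse_s <= exposure_s:
        raise ValueError("strobe pulse must be positive and fit inside the exposure")
    strobe_signal = strobe_irradiance * pulse_s
    ambient_signal = ambient_irradiance * exposure_s
    return ambient_signal / (strobe_signal + ambient_signal)


# Strobe 10x brighter than ambient (relative units), 100 us pulse.
long_exposure = ambient_fraction(10.0, 100e-6, 1.0, 10e-3)    # 10 ms exposure
short_exposure = ambient_fraction(10.0, 100e-6, 1.0, 100e-6)  # exposure matched to pulse
print(f"ambient share, 10 ms exposure:  {long_exposure:.1%}")
print(f"ambient share, 100 us exposure: {short_exposure:.1%}")
```

With these made-up numbers, ambient light makes up roughly 91% of the signal at a 10 ms exposure but only about 9% once the exposure is matched to the strobe pulse.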

Dust, water, and manufacturing debris can also degrade machine vision performance, so enclosures with appropriate IP (Ingress Protection) ratings for dust and water resistance are beneficial. It is also crucial to design both the machine and its surrounding area to protect the vision system from environmental hazards. Finally, following a strict preventive maintenance and cleaning schedule plays a vital role in the long-term accuracy and reliability of the vision system.

How does the choice of imaging sensor affect the overall performance of a machine vision system, and what are some key sensor specifications to consider?

The primary specification in most applications is sensor resolution. There must be enough pixels across the field of view for the vision software to accurately resolve the feature or object being inspected. Sensor resolution is the key determinant of a vision system's measurement precision and its ability to detect small objects. Once the necessary resolution is established, other factors can be taken into consideration.
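A common way to turn this into a number is to decide how many pixels should span the smallest feature and scale that up to the whole field of view. The sketch below assumes 3 pixels per feature as a placeholder; the right value depends on the vision tool and the required reliability, and the function names are hypothetical.

```python
import math


def required_pixels(fov_mm: float, feature_mm: float,
                    pixels_per_feature: float = 3.0) -> int:
    """Minimum pixel count along one axis so that the smallest feature
    covers `pixels_per_feature` pixels (the default is an assumption)."""
    if fov_mm <= 0 or feature_mm <= 0 or pixels_per_feature <= 0:
        raise ValueError("all inputs must be positive")
    return math.ceil(fov_mm / feature_mm * pixels_per_feature)


def object_pixel_size_mm(fov_mm: float, pixels: int) -> float:
    """Size of one sensor pixel projected onto the object."""
    return fov_mm / pixels


# 120 mm field of view, smallest feature 0.25 mm wide.
print("pixels needed across the FOV:", required_pixels(120.0, 0.25))
print("object pixel size with a 1920-pixel-wide sensor:",
      object_pixel_size_mm(120.0, 1920), "mm")
```

Here 1,440 pixels are needed, so a sensor 1,920 pixels wide covers this axis with some margin; the other axis is checked the same way.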

Sensors are manufactured with two shutter options: global and rolling. A rolling shutter sensor exposes and reads out the image row by row, from the top row of pixels down to the bottom row. This happens fairly quickly, and the effect is not noticeable on stationary objects.

However, for moving objects, a rolling shutter sensor introduces distortion: the object moves between the moments the top and bottom rows are captured, so the top and bottom of the object appear misaligned. A global shutter sensor is generally preferred because it exposes all pixels on the sensor simultaneously. The image is a true freeze-frame of the object, without distortion across the sensor.
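The size of the rolling-shutter distortion can be estimated from the object's speed and the sensor's line (row) time. The sketch below assumes the object moves sideways at constant speed and that consecutive rows start their exposure a fixed line time apart; the line time used is a made-up example, since real values come from the sensor datasheet.

```python
def rolling_shutter_skew_px(speed_mm_s: float, mm_per_px: float,
                            row_offset: int, line_time_s: float) -> float:
    """Sideways offset, in pixels, between two image rows `row_offset` rows apart.

    A global shutter exposes every row at the same moment, so the
    equivalent offset there is zero.
    """
    speed_px_s = speed_mm_s / mm_per_px   # object speed in image pixels per second
    delay_s = row_offset * line_time_s    # time between capturing the two rows
    return speed_px_s * delay_s


# Part moving at 500 mm/s, imaged at 0.125 mm/px, top and bottom 1000 rows apart,
# with a hypothetical 10 us line time.
print(f"skew: {rolling_shutter_skew_px(500.0, 0.125, 1000, 10e-6):.1f} px")
```

A skew of around 40 pixels between the top and bottom of the part would be far too large for most measurements, which is why global shutter sensors are preferred for moving objects.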

The sensor format significantly influences which optics can be paired with the sensor and the size of the field of view, and it involves many trade-offs. Small format sensors (2/3” format and smaller) can use more widely available and less expensive lenses. Large format sensors (1” format and larger) allow for higher resolutions and broader fields of view, but they require higher-end optics to achieve optimum performance.
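Format designations such as 2/3” and 1” are nominal; a 2/3” sensor has a diagonal of roughly 11 mm and a 1” sensor roughly 16 mm. A practical check is to compute the sensor's physical size from its pixel count and pixel pitch and confirm that the lens's image circle covers the diagonal. The sensor below is hypothetical, and the image-circle values are approximate.

```python
import math


def sensor_dimensions_mm(width_px: int, height_px: int, pixel_um: float):
    """Active-area width, height and diagonal in mm (square pixels assumed)."""
    width_mm = width_px * pixel_um / 1000.0
    height_mm = height_px * pixel_um / 1000.0
    return width_mm, height_mm, math.hypot(width_mm, height_mm)


def lens_covers_sensor(image_circle_mm: float, sensor_diagonal_mm: float) -> bool:
    """A lens whose image circle is smaller than the sensor diagonal darkens the corners."""
    return image_circle_mm >= sensor_diagonal_mm


# Hypothetical sensor: 2000 x 1500 pixels at 4.0 um pitch.
w, h, diag = sensor_dimensions_mm(2000, 1500, 4.0)
print(f"{w:.1f} x {h:.1f} mm, diagonal {diag:.1f} mm")
print("2/3-inch lens (about 11 mm circle) covers it:", lens_covers_sensor(11.0, diag))
print("1/2-inch lens (about 8 mm circle) covers it:", lens_covers_sensor(8.0, diag))
```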

Other factors, such as aspect ratios and quantum efficiencies, can also be considered, but in most cases, the above factors will narrow down the available options and drive the choice.

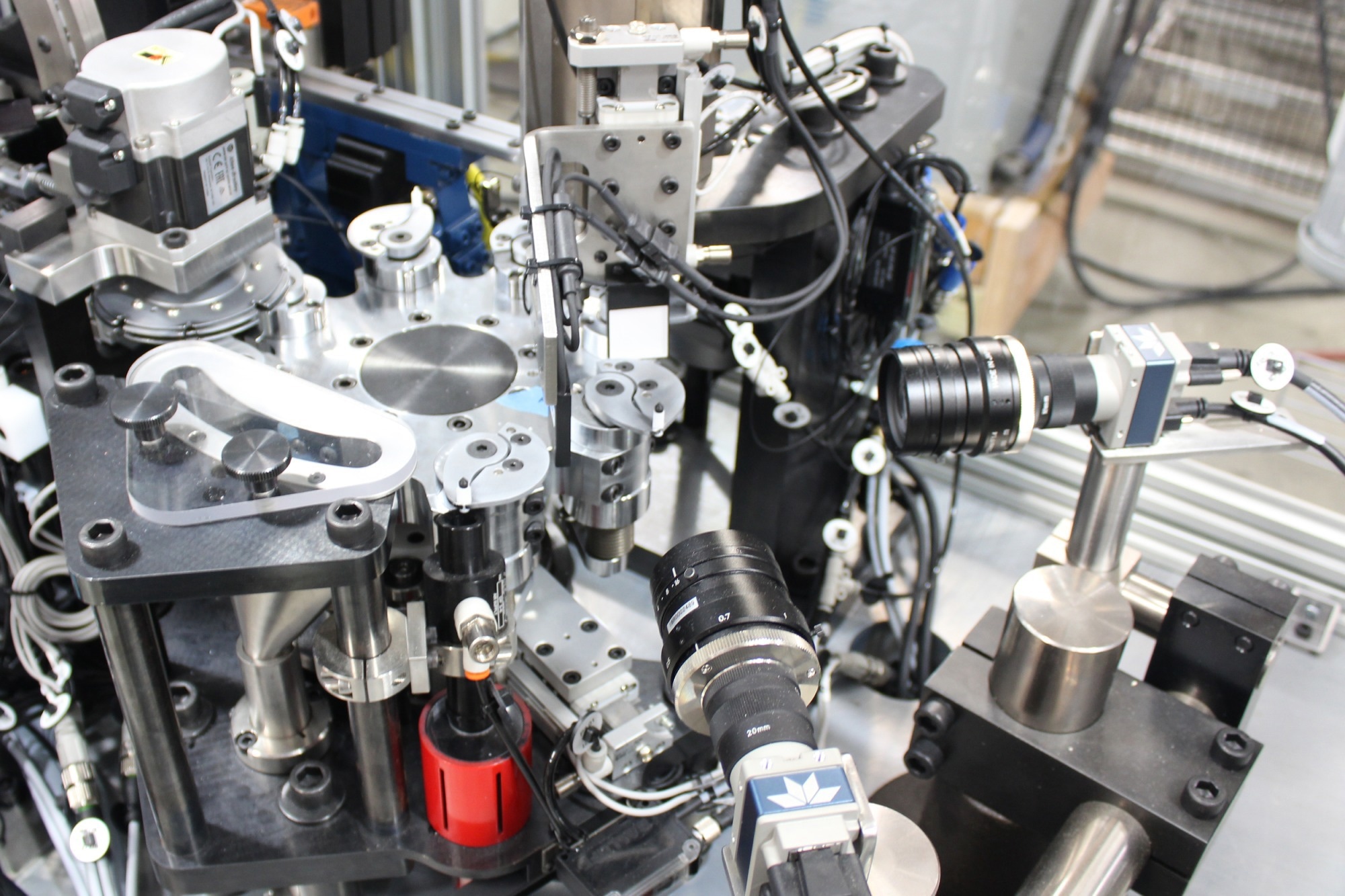

Can you explain the importance of optics in a machine vision system and what factors should be considered when selecting a lens?

Selecting the right lens is a critical aspect of vision system design, as it determines the system's fundamental physical dimensions. Both the working distance (the required mounting distance between the camera and the object) and the camera's field of view are set by the lens selected. An extensive range of standard and specialty lenses is available to suit different applications. Examples of optics frequently used in machine vision include:

- C-mount (and CS-mount) variable focus lenses are probably the most common. They are available in a broad spectrum of focal lengths, enabling users to tailor the system for various fields of view and working distances. Typically, these lenses feature an adjustable aperture, allowing for fine-tuning of light intake.

- Telecentric lenses, while larger and more costly, are essential for highly precise measurements. They keep the object's size in the image constant regardless of its distance from the camera (within the lens's specified depth of field), so feature sizes are measured accurately even if the working distance varies somewhat.

- 360-degree lenses are special lenses that can be used to look at the entire outside or inside surface of a cylinder with a single camera.

- Macro lenses and microscope lenses can be used for very small objects, where the field of view needs to be smaller than the sensor area.
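To see how focal length and working distance set the field of view, and why telecentric lenses matter for measurement, here is a sketch using the thin-lens approximation. It treats the working distance as the distance from the object to the lens's principal plane (real lens datasheets do not match this exactly) and models an ideal telecentric lens as having constant magnification. All values and function names are illustrative.

```python
def magnification(focal_mm: float, object_distance_mm: float) -> float:
    """Thin-lens magnification m = f / (d - f) for an object at distance d."""
    if object_distance_mm <= focal_mm:
        raise ValueError("object must be farther away than the focal length")
    return focal_mm / (object_distance_mm - focal_mm)


def field_of_view_mm(sensor_mm: float, focal_mm: float, object_distance_mm: float) -> float:
    """Object-side extent imaged onto a sensor dimension of `sensor_mm`."""
    return sensor_mm / magnification(focal_mm, object_distance_mm)


def measured_size_mm(true_mm: float, focal_mm: float, calibrated_mm: float,
                     actual_mm: float, telecentric: bool = False) -> float:
    """Size reported by a system calibrated at `calibrated_mm` when the part
    actually sits at `actual_mm`. An ideal telecentric lens keeps the same
    magnification, so its reading does not change."""
    if telecentric:
        return true_mm
    scale = magnification(focal_mm, actual_mm) / magnification(focal_mm, calibrated_mm)
    return true_mm * scale


# 25 mm lens, 8 mm wide sensor, object 525 mm from the lens.
print("FOV width:", round(field_of_view_mm(8.0, 25.0, 525.0), 1), "mm")
# A 10 mm feature that ends up 10 mm closer than the calibration distance.
print("conventional lens reads:", round(measured_size_mm(10.0, 25.0, 525.0, 515.0), 3), "mm")
print("telecentric lens reads: ", measured_size_mm(10.0, 25.0, 525.0, 515.0, telecentric=True), "mm")
```

In this example, a 10 mm change in working distance produces about a 2% size error with a conventional lens, which is the kind of error a telecentric lens is designed to avoid.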

Image Credit: Teledyne Dalsa

What role does lighting play in a machine vision system, and what are some different types of lighting options?

Lighting is a critical component of a vision system, and the right choice of lighting can significantly improve how effectively the system captures essential information from the target. Machine vision lighting is designed to give the camera a reproducible image that will not change over millions of cycles, ensuring the long-term stability and accuracy of the system.

Machine vision lights also come in a wide variety of shapes, designs and illumination angles, which can be used to selectively illuminate features of interest on the parts being inspected. For example:

- Low-angle lights can highlight bumps and scratches while suppressing reflections from flat surfaces.

- On-axis lights provide illumination along the lens's optical axis and are good for illuminating the inside of holes or cavities.

- Backlights provide a silhouette of an object and allow for easy and accurate measurement of external dimensions.

- Different colors or wavelengths can be used to accentuate contrast and details.
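With a backlight, the part appears as a dark silhouette on a bright background, so an external dimension can be measured from where the intensity crosses a threshold. The sketch below works on a single image row, assumes one object per row that does not touch the image border, and places each edge by linear interpolation between neighboring pixels for sub-pixel precision. The threshold, scale and function name are assumptions; commercial vision libraries provide more robust edge and caliper tools.

```python
from typing import List


def silhouette_width_mm(row: List[float], threshold: float, mm_per_px: float) -> float:
    """Width of the dark silhouette in one backlit image row."""
    dark = [i for i, v in enumerate(row) if v < threshold]
    if not dark:
        return 0.0
    first, last = dark[0], dark[-1]
    if first == 0 or last == len(row) - 1:
        raise ValueError("silhouette touches the image border; cannot locate both edges")
    # Left edge: intensity falls from row[first - 1] (bright) to row[first] (dark).
    a, b = row[first - 1], row[first]
    left = (first - 1) + (a - threshold) / (a - b)
    # Right edge: intensity rises from row[last] (dark) to row[last + 1] (bright).
    a, b = row[last], row[last + 1]
    right = last + (threshold - a) / (b - a)
    return (right - left) * mm_per_px


profile = [200, 200, 200, 100, 0, 0, 0, 100, 200, 200]
print(f"width: {silhouette_width_mm(profile, threshold=150, mm_per_px=0.5):.2f} mm")
```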

How can filters be used in a vision system to enhance image quality, and what are some common types of filters?

Filters in machine vision systems are used to selectively block or attenuate certain wavelengths of light before they reach the camera. The most commonly used type is the bandpass filter, which transmits only a narrow band of wavelengths. For instance, a red bandpass filter passes only a specific range of red light (approximately 615-660 nm) and blocks other wavelengths.

This is particularly advantageous in combination with a machine vision light that emits red light. The filter blocks ambient and extraneous light outside its passband, so the vision system captures mainly the structured light it is designed to use. This greatly enhances repeatability and stability under varying environmental conditions.

Additionally, in systems with multiple cameras and light sources, employing different wavelength filters and light combinations can prevent cross-interference between the various light sources.
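Under idealized assumptions (a rectangular filter that transmits everything inside its passband and nothing outside, and an ambient spectrum that is flat from 400 to 700 nm), the share of ambient light that still reaches the sensor is simply the passband width over the ambient band. The same overlap test can flag cross-interference between one station's light and another camera's filter. Real ambient sources and filters are not this ideal, and the blue band used below is a hypothetical example.

```python
from typing import Tuple

Band = Tuple[float, float]  # (low_nm, high_nm)


def overlap_nm(a: Band, b: Band) -> float:
    """Width of the wavelength range shared by two bands (0 if they are disjoint)."""
    return max(0.0, min(a[1], b[1]) - max(a[0], b[0]))


def ambient_share(passband: Band, ambient: Band = (400.0, 700.0)) -> float:
    """Fraction of a flat ambient spectrum passed by an ideal bandpass filter."""
    return overlap_nm(passband, ambient) / (ambient[1] - ambient[0])


red_filter: Band = (615.0, 660.0)
blue_light: Band = (450.0, 490.0)  # hypothetical second station's light

print(f"ambient light passed by the red filter: {ambient_share(red_filter):.0%}")
print("blue light leaks into the red camera:", overlap_nm(blue_light, red_filter) > 0)
```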

The polarizing filter is another valuable tool in machine vision. It blocks light that is polarized in a particular orientation, and because reflected light is often partially polarized, this makes it effective at reducing glare and reflections entering the lens. Machine vision polarizing filters are typically adjustable, so the user can rotate the filter to select which polarization orientation is blocked.

The polarizing filter is even more effective when combined with a polarized light source. By setting the polarization angles of the light source and the filter relative to each other, typically crossed at 90 degrees, glare and reflections can be significantly minimized. This setup is frequently used in applications such as barcode reading, especially when the code is printed on a reflective surface.
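The effect of rotating the filter follows Malus's law: an ideal polarizer passes a fraction cos²θ of linearly polarized light, where θ is the angle between the light's polarization and the filter's axis. The sketch assumes ideal polarizers and that specular glare keeps the light source's polarization while light scattered diffusely by the part is largely depolarized (so roughly half of it passes at any angle). Real polarizers have a finite extinction ratio, so glare is reduced rather than eliminated.

```python
import math


def transmitted_fraction(angle_deg: float) -> float:
    """Malus's law for an ideal polarizer: I / I0 = cos^2(theta)."""
    return math.cos(math.radians(angle_deg)) ** 2


for angle in (0, 45, 90):
    print(f"{angle:>2} deg: {transmitted_fraction(angle):.3f} of the polarized glare passes")
```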

What is the significance of frame rate in machine vision, and how does it impact the quality of captured images?

In machine vision, frame rate, measured in frames per second (fps), is the number of images that can be captured and processed per second. A system's frame rate depends on the time the sensor takes to capture an image, plus the time the vision system takes to analyze that image, plus the time required for communications and other overhead. In a well-designed vision system, the frame rate should not affect the quality of captured images, up to the maximum rate of the sensor used.
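The sum described above translates directly into a frame-rate estimate. For contrast, the sketch also shows a pipelined design, an assumption not described in the text, in which capturing the next frame overlaps analysis of the current one, so the slowest stage sets the pace. The timings are illustrative.

```python
def sequential_fps(capture_ms: float, processing_ms: float, overhead_ms: float) -> float:
    """Frame rate when each stage finishes before the next frame starts."""
    return 1000.0 / (capture_ms + processing_ms + overhead_ms)


def pipelined_fps(capture_ms: float, processing_ms: float, overhead_ms: float) -> float:
    """Frame rate if stages overlap on consecutive frames: the slowest stage sets the pace."""
    return 1000.0 / max(capture_ms, processing_ms, overhead_ms)


# Illustrative timings: 5 ms capture, 15 ms analysis, 5 ms communications.
print(f"sequential: {sequential_fps(5, 15, 5):.1f} fps")
print(f"pipelined:  {pipelined_fps(5, 15, 5):.1f} fps")
```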

Image Credit: Teledyne Dalsa

In terms of hardware and processing power, what considerations should be made for machine vision systems involving deep learning and artificial intelligence?

When discussing deep learning and AI for machine vision hardware, two different modes need to be defined. A system using AI needs a Training mode to build the AI model, and it needs an Inference mode to run the AI model on the production line. The hardware required for these two modes can be quite different.

Training AI models typically demands more advanced hardware compared to traditional machine vision requirements. It is usually recommended to use a high-end graphics card (GPU) and a powerful PC, or alternatively, access high-end cloud computing resources.

Implementing either of these options in a factory setting can be challenging, which has led to a growing trend towards optimizing training processes to reduce resource demands. However, for complex tasks that require high accuracy, a high-end GPU remains essential.

The hardware requirements for AI model inference are considerably less demanding than those for training. While a GPU was necessary for both training and inference just a few years ago, advancements in the field have enabled AI inference to be conducted on more modest hardware. Nowadays, inference can be performed on a CPU, and many AI models are capable of running 'at the edge' on relatively low-power smart cameras and embedded devices.

Recently, there has been a trend towards simplifying AI processes, leading to the emergence of a new category known as Edge AI. In this approach, both training and inference are conducted by a smart camera or an embedded device directly on the production line. This innovation is made possible by advancements in AI model design, including techniques like pruning and quantization.

Models are initially pre-trained on general data and then deployed to edge devices, where they are fine-tuned to specific conditions on the production line. For customers, this process is seamless, resulting in the ability to set up an inspection system right on the production line with just a few example images, eliminating the need for additional costly hardware.
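The interview names pruning and quantization without going into detail. As a rough illustration only, the sketch below shows textbook versions of each on a plain list of weights: unstructured magnitude pruning (zero the smallest-magnitude weights) and symmetric per-tensor int8 quantization (store each weight as an 8-bit integer times one shared scale). The function names are hypothetical, and this is not how any particular product or framework implements these techniques.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(sparsity * len(weights))
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: w is approximated by q * scale, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.01, 1.0]
print("pruned (50%):", magnitude_prune(weights, 0.5))
q, scale = quantize_int8(weights)
print("int8 values:", q, "scale:", scale)
print("max round-trip error:", max(abs(a - b) for a, b in zip(weights, dequantize(q, scale))))
```

The round-trip error is bounded by half the scale, which is why quantized models can often run on modest edge hardware with only a small loss of accuracy.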

How can a vision system's noise and gain settings be optimized to achieve the best image quality?

It is important to find the right balance between noise and gain for the specific application and lighting conditions. When a high frame rate is essential and a short exposure cannot be avoided, the camera's gain can be used to compensate for the reduced brightness.

Increasing the gain amplifies the sensor's signal, which enables the vision system to capture a brighter image with less light. However, gain amplifies noise along with the signal, so increasing the gain should be the last option.
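A simplified sketch of the trade-off, assuming shot noise is the only noise source (read noise and other sources are ignored) and using the 20·log10 convention for gain in dB, which varies between vendors: halving the exposure needs about 6 dB of gain to restore brightness, but because gain scales signal and noise alike, the signal-to-noise ratio stays at the lower value set by the reduced light. The electron counts are illustrative.

```python
import math


def gain_db_for_exposure_cut(old_exposure_s: float, new_exposure_s: float) -> float:
    """Gain needed to restore brightness after shortening the exposure (20*log10 convention)."""
    return 20 * math.log10(old_exposure_s / new_exposure_s)


def shot_noise_snr(electrons: float, gain: float = 1.0) -> float:
    """Shot-noise-limited SNR. Gain multiplies signal and noise alike, so it cancels."""
    signal = gain * electrons
    noise = gain * math.sqrt(electrons)
    return signal / noise


# Halving the exposure halves the collected electrons (10,000 -> 5,000 here).
print(f"gain to compensate: {gain_db_for_exposure_cut(2e-3, 1e-3):.2f} dB")
print(f"SNR, full exposure, no gain: {shot_noise_snr(10_000):.1f}")
print(f"SNR, half exposure, 2x gain: {shot_noise_snr(5_000, gain=2.0):.1f}")
```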

What is bit depth, and why is it important in machine vision? How does it differ from the dynamic range?

Bit depth describes the number of brightness levels each pixel can represent. Using monochrome 8-bit as an example, each pixel can have 256 values ranging from 0 (pure black) to 255 (pure white).

Generally, higher bit depth is advantageous in machine vision. It provides more levels and finer gradation between brightness values, enhancing the system's sensitivity to subtle changes. For instance, a monochrome 16-bit image offers 65,536 levels per pixel. However, a higher bit depth results in larger images and can reduce the frames per second (fps) at which the system operates.
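The level count and the size cost are simple to compute. The sketch assumes samples are stored unpacked in whole bytes, so 10- and 12-bit data occupy 2 bytes per pixel; packed pixel formats, where a camera supports them, reduce that overhead. On a link with fixed bandwidth, doubling the bytes per frame roughly halves the achievable frame rate. The function names are hypothetical.

```python
import math


def gray_levels(bits: int) -> int:
    """Number of distinct brightness values per pixel."""
    return 2 ** bits


def frame_bytes(width: int, height: int, bits: int, channels: int = 1) -> int:
    """Uncompressed frame size, assuming each sample is stored unpacked in whole bytes."""
    return width * height * channels * math.ceil(bits / 8)


for bits in (8, 12, 16):
    mb = frame_bytes(2048, 2048, bits) / 1e6
    print(f"{bits:>2}-bit: {gray_levels(bits):>6} levels, {mb:.1f} MB per 2048 x 2048 frame")
```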

Dynamic range refers to the ratio between the brightest and darkest intensities that can be captured in an image. Whereas bit depth describes how finely brightness is divided into levels, dynamic range describes the span of brightness those levels cover. In machine vision, the appropriateness of a specific dynamic range depends on the application; there is no universally 'better' or 'worse' range. The key consideration is ensuring that the image is neither underexposed nor overexposed for the intended use.
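For a camera sensor, dynamic range is commonly specified as the ratio of its saturation (full-well) capacity to its noise floor, often quoted in dB. Taking log2 of that ratio gives a rough rule of thumb, not a specification, for the bit depth needed to represent the range without discarding usable levels. The sensor values below are illustrative.

```python
import math


def sensor_dynamic_range(full_well_e: float, read_noise_e: float):
    """Return dynamic range as a ratio, in dB (20*log10) and in bits (log2)."""
    ratio = full_well_e / read_noise_e
    return ratio, 20 * math.log10(ratio), math.log2(ratio)


ratio, db, bits = sensor_dynamic_range(10_000, 2.5)
print(f"{ratio:.0f}:1, {db:.1f} dB, about {bits:.1f} bits")
print("bit depth needed to cover it:", math.ceil(bits))
```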

Could you elaborate on the role of software in a vision system and how it interacts with hardware components?

The performance of a camera in a vision system is heavily influenced by the software it uses. Close cooperation between the hardware and software components is vital for peak performance: it is the software's ability to process and interpret the image data captured by the camera that enables the vision system to perform its designated functions efficiently.

Multiple software layers are integral to the system's functionality, and they must work together for the system to operate effectively:

- Firmware: Operates on the sensor and camera to control image capture.

- Acquisition Layer: Manages the transfer of images from the camera to a PC or from the sensor to usable memory.

- Machine Vision Software: Interprets and analyzes the captured images.

- Communications Layer: Transmits results or measurements to factory control systems.

- User Interface Layer: Facilitates communication and displays information to operators and technicians.
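One way to picture how these layers cooperate is as a chain of functions, each handing its output to the next. In the hypothetical sketch below, each layer is passed in as a function, so one layer (for example, acquisition through a real camera SDK, or a different message format for the factory controller) could be swapped without touching the others. All names, the toy analysis and the message format are made up for illustration.

```python
from typing import Callable, Dict, List

Image = List[List[int]]


def acquire() -> Image:
    """Firmware + acquisition layers (stand-in): return a tiny synthetic frame."""
    return [[0, 0, 255, 255],
            [0, 0, 255, 255]]


def analyze(image: Image) -> Dict[str, object]:
    """Machine vision layer (toy): count bright pixels and apply a pass/fail rule."""
    bright = sum(1 for row in image for value in row if value > 128)
    return {"bright_pixels": bright, "passed": bright >= 4}


def to_message(result: Dict[str, object]) -> str:
    """Communications layer: format a result for a control system (format is hypothetical)."""
    status = "OK" if result["passed"] else "NG"
    return f"RESULT;{status};{result['bright_pixels']}"


def run_cycle(acquire_fn: Callable[[], Image],
              analyze_fn: Callable[[Image], Dict[str, object]],
              send_fn: Callable[[Dict[str, object]], str],
              show_fn: Callable[[str], None] = print) -> str:
    """One inspection cycle passing data through each layer in turn."""
    message = send_fn(analyze_fn(acquire_fn()))
    show_fn(message)  # user interface layer
    return message


run_cycle(acquire, analyze, to_message)
```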

What are some key functions of image acquisition and control software in a machine vision system?

The primary role of image acquisition and control software is to configure the camera's image-capturing process. Users typically use this software to set exposure times, adjust gains or color corrections, and perform flat-field or dead pixel corrections.

It also allows for the customization of advanced camera features and settings. Additionally, users can determine the camera's triggering mechanism, whether it is through an external sensor, software control, or a timer. The software also facilitates the setup of connections to machine vision lights and the timing of strobe outputs.

This software can either be a standalone application or integrated into a more comprehensive, user-friendly UI within the vision system. Once configured, the acquisition software usually operates in the background, automatically managing the capture and transfer of images.
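Flat-field and dead pixel corrections are usually applied by the camera or acquisition software, but the underlying arithmetic is simple. A common form of flat-field correction subtracts a dark frame (captured with no light) and divides by a flat frame (captured of a uniform target) minus the dark frame, rescaled by the average of that difference so overall brightness is preserved. The sketch below uses plain lists, replaces listed dead pixels with the mean of their healthy horizontal neighbors, and uses hypothetical function names; real implementations vary.

```python
from typing import List, Set, Tuple

Frame = List[List[float]]


def flat_field_correct(raw: Frame, dark: Frame, flat: Frame) -> Frame:
    """Corrected = (raw - dark) * mean(flat - dark) / (flat - dark), per pixel."""
    gain_map = [[f - d for f, d in zip(f_row, d_row)] for f_row, d_row in zip(flat, dark)]
    count = sum(len(row) for row in gain_map)
    mean_gain = sum(sum(row) for row in gain_map) / count
    corrected = []
    for r_row, d_row, g_row in zip(raw, dark, gain_map):
        corrected.append([(r - d) * mean_gain / g if g > 0 else 0.0
                          for r, d, g in zip(r_row, d_row, g_row)])
    return corrected


def fix_dead_pixels(image: Frame, dead: Set[Tuple[int, int]]) -> Frame:
    """Replace each (row, col) in `dead` with the mean of its healthy left/right neighbors."""
    fixed = [list(row) for row in image]
    for r, c in dead:
        neighbors = [image[r][cc] for cc in (c - 1, c + 1)
                     if 0 <= cc < len(image[r]) and (r, cc) not in dead]
        if neighbors:
            fixed[r][c] = sum(neighbors) / len(neighbors)
    return fixed


# One-row example: pixel 1 is half as sensitive as pixel 0 in the flat frame.
dark = [[10, 10]]
flat = [[110, 60]]
print(flat_field_correct([[110, 60]], dark, flat))  # a uniform scene gives equal values
print(fix_dead_pixels([[10, 0, 30]], {(0, 1)}))
```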

Image Credit: Teledyne Dalsa

What innovative or unique approaches have you seen in machine vision applications that deviate from the traditional methods?

The hot topic is AI and deep learning. With AI, we have been able to solve machine vision problems that were previously impossible with traditional tools. AI excels in applications where a lot of environmental noise is introduced into the image.

AI is very good at ignoring irrelevant variation, for example when objects may be dirty, wet, or covered with water droplets to varying degrees. AI models can also be impressively accurate at counting and sorting overlapping or partially hidden objects.

The field of 3D machine vision is expanding, with a variety of laser scanners now available that provide three-dimensional data to vision systems. 3D technology is particularly intriguing because it enables the measurement of an object's height and, since it does not depend on surface contrast in the way a conventional 2D image does, it can often detect defects that would be invisible to a conventional camera.
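Many laser profilers measure height by triangulation. In one simple geometry (an assumption here), the camera looks straight down and the laser sheet is tilted by an angle theta from the camera's axis; raising the surface by h shifts the laser line sideways by h x tan(theta), so the height follows from the observed shift. The function name and numbers are illustrative, and real sensors are calibrated rather than computed from a nominal angle.

```python
import math


def height_mm(shift_px: float, mm_per_px: float, laser_angle_deg: float) -> float:
    """Height from the sideways laser-line shift (camera vertical, laser tilted)."""
    shift_mm = shift_px * mm_per_px
    return shift_mm / math.tan(math.radians(laser_angle_deg))


# A 16-pixel shift at 0.125 mm/px with the laser tilted 45 degrees.
print(f"{height_mm(16, 0.125, 45):.3f} mm")
# At a smaller 30-degree angle the same shift means more height,
# so each pixel of shift represents a coarser height step.
print(f"{height_mm(16, 0.125, 30):.3f} mm")
```

Larger triangulation angles give finer height resolution but cause more occlusion (areas the camera or laser cannot see), which is one of the main design trade-offs for laser profilers.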

By integrating 3D and line scan technologies, it is feasible to perform a comprehensive, data-rich scan of an object, allowing for the detection of a wide range of defects in a single pass.
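Line scan cameras build a 2D image one line at a time as the part moves past, so the line rate has to be matched to the motion. For square object pixels, the camera should capture one line each time the part travels one object pixel, that is, the line rate equals the speed divided by the object pixel size across the line. The values and function name below are illustrative.

```python
def required_line_rate_hz(speed_mm_s: float, fov_mm: float, pixels_across: int) -> float:
    """Line rate that gives square object pixels for a part moving at `speed_mm_s`."""
    pixel_mm = fov_mm / pixels_across  # object pixel size across the direction of motion
    return speed_mm_s / pixel_mm       # one line per pixel-size of travel


# Conveyor at 1 m/s, 400 mm imaged across a 4096-pixel line.
print(f"{required_line_rate_hz(1000.0, 400.0, 4096):,.0f} lines/s")
```

If the camera runs slower than this, each line covers more travel than a pixel's width and the pixels become elongated along the direction of motion; line triggers are therefore commonly derived from an encoder on the conveyor so the line rate follows the actual speed.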

With the increase in online shopping, warehousing and logistics are turning to AI, deep learning, and robotics to heighten efficiency and ensure safety. Autonomous Mobile Robots (AMRs) are being used to optimize the entire supply chain. From transporting and sorting goods within the warehouse to supporting picking processes to optimizing productivity and accuracy in order fulfillment, AMRs allow companies to remain competitive and adapt to new market needs.

Machine vision is increasingly being utilized in precision agriculture for various applications, including assessing crop seed quality, detecting water and nutrient deficiencies, identifying pest stresses, evaluating crop quality, and locating invasive species.

Machine vision technology can account for variations in the light absorption characteristics of crops, vegetation, foods, and agricultural products, allowing a suitable solution to be found for each specific problem.
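One widely used example of exploiting absorption differences, not taken from the interview, is the normalized difference vegetation index (NDVI). Healthy leaves absorb strongly in red and reflect strongly in the near-infrared, so NDVI = (NIR - Red) / (NIR + Red), computed from calibrated reflectances in the two bands, ranges from -1 to 1 and tends to be higher for healthier vegetation. The reflectance values below are illustrative only.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index for one pixel (reflectances in 0..1)."""
    total = nir + red
    return 0.0 if total == 0 else (nir - red) / total


print(f"healthy canopy:  {ndvi(0.50, 0.08):.2f}")
print(f"stressed canopy: {ndvi(0.30, 0.15):.2f}")
```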

About Szymon

Szymon Charwaski is a Product Manager for Teledyne DALSA. He has 15 years of experience in computer vision, automation and product design, and has recently been focusing on applying AI and deep learning to solve challenging real-time industrial inspection applications.

This information has been sourced, reviewed and adapted from materials provided by Teledyne DALSA.

For more information on this source, please visit Teledyne DALSA.

Disclaimer: The views expressed here are those of the interviewee and do not necessarily represent the views of AZoM.com Limited (T/A) AZoNetwork, the owner and operator of this website. This disclaimer forms part of the Terms and Conditions of use of this website.