In a recent article published in the journal Nature Communications, researchers investigated the application of large language models (LLMs) to automate atomic force microscopy (AFM) experiments, a complex technique pivotal in materials science and nanotechnology. The study introduced a comprehensive framework named Artificially Intelligent Lab Assistant (AILA), designed to leverage state-of-the-art LLMs for orchestrating AFM operations, with a particular emphasis on optical measurements such as high-resolution imaging and surface characterization.

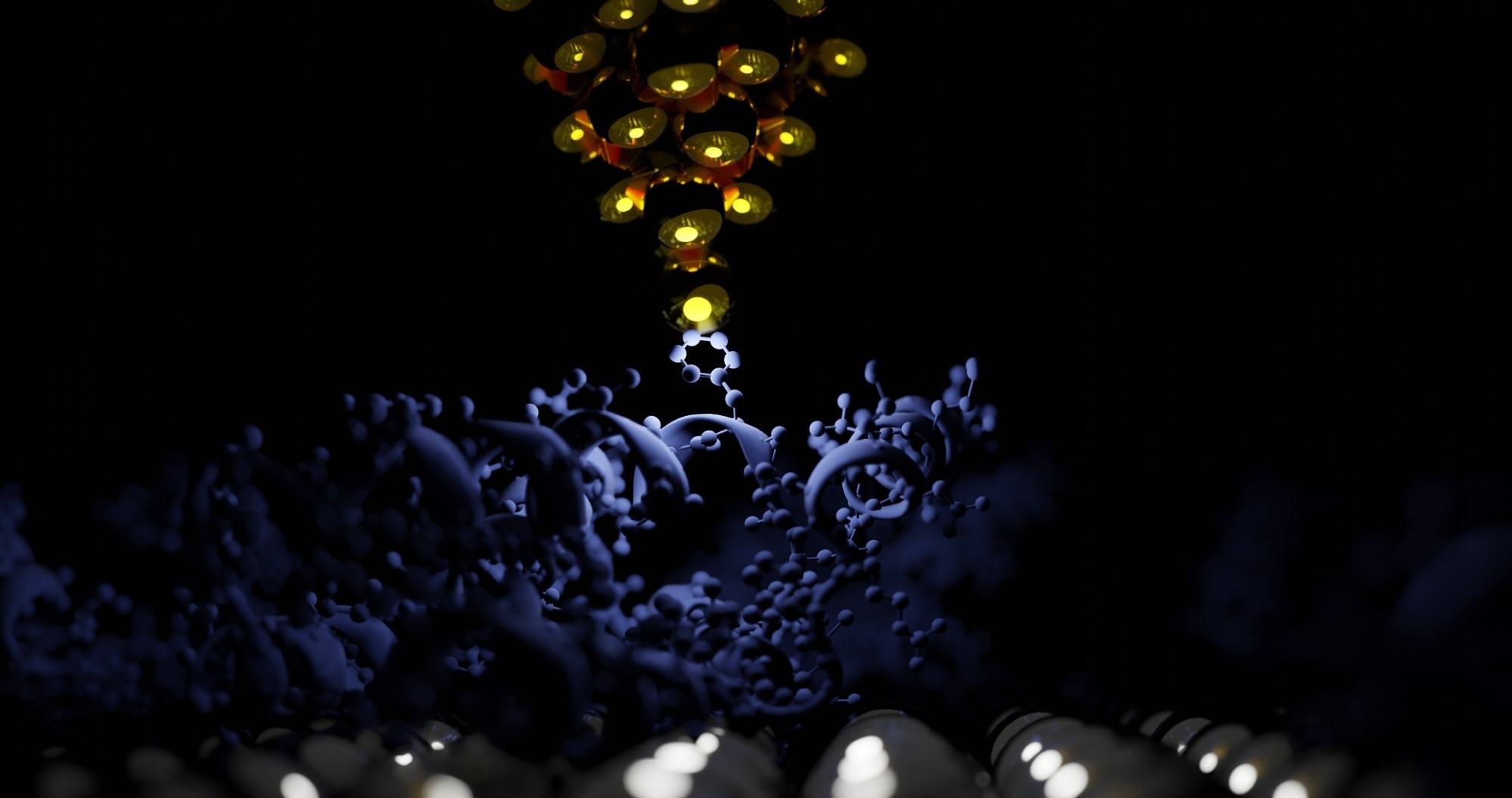

Image Credit: sanjaya viraj bandara/Shutterstock.com

Background

Autonomous laboratory systems have made significant strides with the integration of AI, especially in automating intricate analytical and imaging workflows. These processes typically involve a coordinated sequence of tasks, such as probe calibration, surface scanning, focusing, and surface characterization, each relying on image quality and surface features. Successfully automating this range of tasks requires systems capable of reasoning across multiple modules and adapting to changing experimental conditions.

Previous efforts to employ AI in microscopy have typically focused on specific operational facets like image enhancement, illumination optimization, or surface roughness quantification. However, these have largely been limited to specialized, narrowly-defined tasks rather than encompassing the full experimental workflow. Recent advancements with large language models offer a promising avenue for creating more adaptable and generalizable automation systems.

The Current Study

The core of the methodology involves the deployment of a multi-agent LLM system, where each agent is assigned specialized roles, such as controlling optical components, performing surface analysis, or managing experimental parameters. The agents communicate using a structured protocol that facilitates task decomposition, resource allocation, and decision-making. To operationalize this structure, the researchers developed a set of prompts, context management protocols, and safety checks embedded within the framework.
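
The routing idea described above can be illustrated with a minimal sketch. Everything here is hypothetical: the role names, the `plan` function, and the keyword-based decomposition are stand-ins for illustration only, and a real system would use an LLM to decompose the request rather than string matching. It is not the AILA implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class SubTask:
    role: str         # which specialized agent should handle the step (hypothetical names)
    instruction: str  # natural-language instruction passed to that agent


def plan(request: str) -> List[SubTask]:
    """Stand-in planner: keyword matching in place of an LLM-based decomposition."""
    text = request.lower()
    steps: List[SubTask] = []
    if "image" in text or "scan" in text:
        steps.append(SubTask("instrument", "acquire an image of the sample"))
    if "roughness" in text:
        steps.append(SubTask("analysis", "compute surface roughness from the latest image"))
    return steps


def instrument_agent(instruction: str) -> str:
    # Placeholder for an agent that would send commands to the microscope.
    return f"[instrument] done: {instruction}"


def analysis_agent(instruction: str) -> str:
    # Placeholder for an agent that would run image analysis.
    return f"[analysis] done: {instruction}"


# Registry mapping each role to the agent that handles it.
AGENTS: Dict[str, Callable[[str], str]] = {
    "instrument": instrument_agent,
    "analysis": analysis_agent,
}


def run(request: str) -> List[str]:
    """Decompose the request, then dispatch each sub-task to its agent in order."""
    return [AGENTS[task.role](task.instruction) for task in plan(request)]


if __name__ == "__main__":
    for line in run("Scan the sample and report its roughness"):
        print(line)
```

Keeping the planner separate from the agent registry means a new specialized role can be added without touching the dispatch logic, which is one plausible reason to prefer this kind of modular design.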

The experimental setup encompasses a variety of optical AFM tasks, such as high-resolution imaging of surface features, calibration of optical parameters like focus and illumination, and surface roughness measurements. The framework interfaces with hardware through dedicated APIs, allowing the agents to send commands to optical microscopes, adjust focus and light intensity, and acquire images autonomously. Optical tasks are decomposed into sub-tasks such as focusing on surface features, capturing multi-scale images, and optimizing illumination conditions.

The benchmarking suite, AFMBench, presents a series of 100 real-world tasks that span the entire workflow of optical AFM experiments. These tasks range from routine image acquisition to complex multi-step procedures, such as graphene layer counting and indenter detection, that require precise coordination of multiple tools and decision-making capabilities. Critical performance metrics include task success rate, tool coordination efficiency, safety adherence, and robustness to prompt variations.
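
As a rough illustration of how a task success rate might be tallied per task category, consider the sketch below. The category labels and the sample records are invented for the example and are not AFMBench data; the function simply divides passed tasks by total tasks in each group.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def success_rates(results: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Return the fraction of successful tasks for each category.

    `results` is an iterable of (category, succeeded) pairs.
    """
    totals: Dict[str, int] = defaultdict(int)
    passed: Dict[str, int] = defaultdict(int)
    for category, ok in results:
        totals[category] += 1
        if ok:
            passed[category] += 1
    return {category: passed[category] / totals[category] for category in totals}


# Made-up records for illustration only (not results from the study).
sample = [
    ("single-tool", True),
    ("single-tool", False),
    ("multi-tool", True),
]
rates = success_rates(sample)
```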

Results and Discussion

The experimental results demonstrate that LLMs can effectively manage a broad spectrum of optical AFM tasks. Broadly, multi-agent approaches markedly outperform their single-agent counterparts, particularly on complex multi-step workflows. This aligns with prior observations that specialized agents, when working collaboratively, can handle intricate experimental sequences more reliably and efficiently. For optical experiments, this translates into better surface feature detection, more accurate focus adjustment, and improved surface roughness measurements, all carried out autonomously. GPT-4o achieved 65% overall on AFMBench; in a subset comparison, the multi-agent setup reached 70% versus 58% for the single-agent setup, a gap of 12 percentage points.

A critical insight from the study pertains to the challenge of prompt sensitivity and the ‘sleepwalking’ phenomenon, where AI agents tend to operate outside the intended operational boundaries after minor prompt variations. This behavior is especially problematic in optics, where safety constraints involve laser safety, focus limits, and hardware integrity. The researchers identified that multi-agent architectures reduce the severity of such deviations but do not eliminate them entirely. Accordingly, integrating safety checks and formal verification protocols into the framework is highlighted as a priority for future development.
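
One simple form a safety check could take is a guard that validates every agent-proposed command against fixed bounds before it reaches the hardware. The sketch below assumes this approach for illustration; the parameter names and limit values are hypothetical and do not come from the study, which is not described here in enough detail to reproduce its actual checks.

```python
from typing import Dict, List, Tuple

# Hypothetical parameter names and bounds, chosen only for illustration.
LIMITS: Dict[str, Tuple[float, float]] = {
    "focus_um": (0.0, 100.0),
    "laser_power_mw": (0.0, 5.0),
}


def check_command(params: Dict[str, float],
                  limits: Dict[str, Tuple[float, float]]) -> List[str]:
    """Return a list of violations; an empty list means the command may proceed."""
    violations: List[str] = []
    for name, value in params.items():
        if name not in limits:
            # Reject anything the guard has no rule for, rather than passing it through.
            violations.append(f"{name}: not an allowed parameter")
            continue
        low, high = limits[name]
        if not (low <= value <= high):
            violations.append(f"{name}={value} outside [{low}, {high}]")
    return violations


safe = check_command({"focus_um": 50.0}, LIMITS)
unsafe = check_command({"focus_um": 500.0, "stage_tilt": 1.0}, LIMITS)
```

Because the guard runs outside the LLM, a prompt variation that pushes an agent off course cannot by itself bypass the bounds, although a check like this only covers the parameters it knows about.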

Another key finding concerns the discrepancy between the question-answering prowess and the experimental control capabilities of existing LLMs. Notably, models that excel at materials science questions perform poorly at managing physical experiments, indicating that domain knowledge does not necessarily translate into operational competence. For optical experiments, this emphasizes the importance of specialized modules and real-time feedback mechanisms, which current models inherently lack.

Conclusion

This work marks a notable step forward in developing autonomous optical systems by integrating large language models within a modular, multi-agent framework. The resulting AILA system demonstrates the ability to manage end-to-end optical AFM workflows, from surface imaging to in-depth surface analysis, with success rates that exceed previous question-answering benchmarks. Evaluation using the AFMBench benchmark suite highlights that multi-agent setups, where each agent takes on a specialized role, consistently outperform single-agent systems, particularly when navigating complex, multi-tool tasks central to optical experiments. Looking ahead, priorities include strengthening safety mechanisms, incorporating domain-specific tools, and improving resilience to prompt variability, all key to enabling real-world deployment in optics and related research areas.

Journal Reference

Mandal I., Soni J., et al. (2025). Evaluating large language model agents for automation of atomic force microscopy. Nature Communications 16, 9104. DOI: 10.1038/s41467-025-64105-7, https://www.nature.com/articles/s41467-025-64105-7