AI vision, that is, image processing with artificial intelligence, is a widely discussed topic. Yet in several areas, such as industrial applications, the promise of this new technology has not yet been fully realized, so long-term practical experience is still lacking.

Image Credit: IDS Imaging Development Systems GmbH

While a number of embedded vision systems on the market already enable the use of AI under industrial conditions, many plant operators are still reluctant to purchase one of these platforms and upgrade their applications.

AI has already opened up new possibilities where rule-based image processing runs out of options and often has no solution. So what is preventing the new technology from spreading even faster?

Access is no longer the obstacle: anyone can already design their own AI-based image processing applications, even without expert knowledge of artificial intelligence or the application programming this would otherwise require.

Artificial intelligence can speed up many work processes and minimize sources of error, while edge computing makes it possible to dispense with expensive industrial computers and the complex infrastructure otherwise needed for high-speed image data transmission.

New and Different

However, AI or machine learning (ML) works very differently from conventional, rule-based image processing, and this also changes how image processing tasks are approached and handled.

The quality of the results is no longer the product of program code written by hand by an image processing expert, as it used to be. Instead, it is determined by how the neural network learns from suitable image data.

In other words, the object features relevant to an inspection are no longer specified in advance by predefined rules; the AI must learn to recognize them during training. The more diverse the training data, the more likely it is that the AI/ML algorithms will recognize the features that actually matter later in operation.
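To illustrate the difference, the following Python sketch contrasts a hand-coded rule with one learned from labeled examples. The feature name `scratch_area`, the fixed threshold of 50 and the training values are all hypothetical, and a single learned threshold is a drastic simplification of a neural network, which learns many features at once.

```python
# Toy contrast between a hand-written rule and a rule learned from labeled data.
# Assumption: each part is summarized by one hypothetical feature, scratch_area,
# and a part counts as defective when that value is high.

def rule_based_is_defect(scratch_area: float) -> bool:
    # Rule-based vision: an expert fixes the threshold by hand.
    return scratch_area > 50.0


def learn_threshold(samples: list[tuple[float, bool]]) -> float:
    """Learn a threshold from labeled (feature, is_defect) examples.

    Tries each midpoint between sorted feature values and keeps the one
    with the fewest training errors (a one-feature decision stump).
    """
    values = sorted(v for v, _ in samples)
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def errors(t: float) -> int:
        return sum((v > t) != label for v, label in samples)

    return min(candidates, key=errors)


training_data = [(5.0, False), (12.0, False), (20.0, False),
                 (31.0, True), (45.0, True), (60.0, True)]
threshold = learn_threshold(training_data)
print(threshold)                                     # 25.5
print(rule_based_is_defect(40.0), 40.0 > threshold)  # False True
```

The learned threshold comes from the data, so a part the hand-set rule would pass is flagged once the examples show that defects start lower; with unrepresentative examples, the learned rule would be just as wrong.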

What sounds simple only leads to the desired result with sufficient expertise and experience.

Without a trained eye for the right image data, errors will occur here too. This means that the key skills needed for working with machine learning methods differ from those required for rule-based image processing.

However, not everyone has the time or manpower to delve into the subject from scratch to acquire a set of new key skills for working with machine learning methods.

Unfortunately, that is the main problem with new technology: it cannot be put to productive use immediately. And if a system delivers excellent results with minimal effort, but those results cannot easily be reproduced, it becomes hard to believe in and trust it.

Complex and Misunderstood

Rationally, one would want to know how AI vision works. But without explanations that are easy to grasp, its results are difficult to evaluate.

Confidence in a new technology is usually built on skills and experience, which sometimes take a long time to acquire, before one knows exactly what the technology can do, how it works, how to apply it and how to manage it properly.

Making matters even more complicated, AI vision is competing with an established technology for which the right environment has been built up over the years through knowledge, training, documentation, software, hardware and development environments.

AI, by contrast, still seems very raw and bare-bones. Despite its well-known benefits and the high accuracy that AI vision can achieve, errors are generally difficult to diagnose. The lack of insight into its inner workings, and results that seem inexplicable, are the flip side of the coin and slow the adoption of these algorithms.

(Not) A Black Box

Neural networks are often erroneously perceived as a black box whose decisions are not intelligible: “Although DL models are undoubtedly complex, they are not black boxes. In fact, it would be more accurate to call them glass boxes, because we can literally look inside and see what each component is doing.” [Quote from “The black box metaphor in machine learning”].

The inference decisions of neural networks are not based on a traditional, human-readable set of rules, and the complex interactions of their artificial neurons may at first be hard for humans to understand. Nevertheless, they are the well-defined result of a mathematical system and are therefore reproducible and analyzable.
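A toy example makes this concrete. The Python sketch below uses made-up weights for a single dense layer, not any real network, to show that inference is a fixed mathematical function: the same input always produces the same output, and the contribution of each input to a neuron can be read off directly.

```python
import math

# Hypothetical weights for one layer of two neurons with three inputs each.
WEIGHTS = [[0.8, -0.5, 0.1],
           [-0.3, 0.9, 0.4]]
BIASES = [0.05, -0.1]


def forward(x: list[float]) -> list[float]:
    """Dense layer with sigmoid activation: y_j = sigmoid(sum_i w_ji * x_i + b_j)."""
    return [1.0 / (1.0 + math.exp(-(sum(w * xi for w, xi in zip(row, x)) + b)))
            for row, b in zip(WEIGHTS, BIASES)]


def contributions(x: list[float], neuron: int) -> list[float]:
    """Per-input contribution w_ji * x_i to one neuron's pre-activation."""
    return [w * xi for w, xi in zip(WEIGHTS[neuron], x)]


sample = [1.0, 0.2, 0.5]
assert forward(sample) == forward(sample)  # same input, same output
print(contributions(sample, 0))            # [0.8, -0.1, 0.05]
```

Real networks have millions of such terms, which is why dedicated tools are needed to summarize them, but each term is as inspectable as these.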

What is still missing are suitable tools to support a proper evaluation, and there is plenty of room for improvement in this area of AI. This is also where the various AI systems on the market show how well they support their users.

Software Makes AI Explainable

For these reasons, IDS Imaging Development Systems GmbH is researching this field together with other institutes and universities to help develop exactly these tools.

The IDS NXT inference camera system already reflects results of this cooperation. Statistical analysis using a so-called confusion matrix makes it possible to determine and understand the overall quality of a trained neural network.

After training, the network can be validated on a predefined series of images whose correct results are already known. The expected results and the actual results returned by inference are then compared side by side in a table.

This shows how often the test objects of each trained object class were recognized correctly or incorrectly. From these hit rates, an overall quality figure for the trained algorithm can then be derived.
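The validation step can be sketched in a few lines of Python. This is a generic illustration, not the IDS NXT implementation; the screw class names and validation results are invented. Rows hold the expected class and columns the class returned by inference, so the diagonal counts correct recognitions.

```python
from collections import Counter


def confusion_matrix(expected: list[str], predicted: list[str],
                     classes: list[str]) -> list[list[int]]:
    """Rows = expected (ground-truth) class, columns = class returned by inference."""
    counts = Counter(zip(expected, predicted))
    return [[counts[(e, p)] for p in classes] for e in classes]


def per_class_hit_rate(matrix: list[list[int]], classes: list[str]) -> dict[str, float]:
    """Fraction of each class's validation images that were recognized correctly."""
    return {c: matrix[i][i] / sum(matrix[i])
            for i, c in enumerate(classes) if sum(matrix[i])}


def overall_accuracy(matrix: list[list[int]]) -> float:
    """Correct recognitions (the diagonal) divided by all validation images."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / sum(map(sum, matrix))


classes = ["hex", "torx", "phillips"]  # hypothetical screw types
expected = ["hex"] * 4 + ["torx"] * 4 + ["phillips"] * 2
predicted = ["hex", "hex", "hex", "torx",
             "torx", "torx", "torx", "torx",
             "phillips", "torx"]
matrix = confusion_matrix(expected, predicted, classes)
print(matrix)                               # [[3, 1, 0], [0, 4, 0], [0, 1, 1]]
print(per_class_hit_rate(matrix, classes))  # {'hex': 0.75, 'torx': 1.0, 'phillips': 0.5}
print(overall_accuracy(matrix))             # 0.8
```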

Furthermore, the matrix shows clearly where recognition accuracy may still be insufficient for productive use. However, it does not explain why.
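Although the matrix cannot say why a class performs poorly, a small helper can at least list the classes below a chosen hit rate and the class each is most often confused with, which points to where more training images are needed. The 90% threshold and the example matrix below are assumptions for illustration.

```python
def weak_spots(matrix: list[list[int]], classes: list[str],
               min_hit_rate: float = 0.9) -> list[tuple[str, float, str]]:
    """Return (class, hit rate, most frequent wrong prediction) for weak classes."""
    report = []
    for i, name in enumerate(classes):
        total = sum(matrix[i])
        if total == 0:
            continue  # no validation images for this class
        hit_rate = matrix[i][i] / total
        if hit_rate < min_hit_rate:
            # Largest off-diagonal entry in the row = most frequent confusion.
            j = max((k for k in range(len(classes)) if k != i),
                    key=lambda k: matrix[i][k])
            report.append((name, hit_rate, classes[j]))
    return report


classes = ["hex", "torx", "phillips"]  # hypothetical screw types
matrix = [[3, 1, 0],
          [0, 4, 0],
          [0, 1, 1]]
for name, rate, confused_with in weak_spots(matrix, classes):
    print(f"{name}: {rate:.0%} correct, most often mistaken for {confused_with}")
# hex: 75% correct, most often mistaken for torx
# phillips: 50% correct, most often mistaken for torx
```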

Figure 1. This confusion matrix of a CNN classifying screws shows where the identification quality can be improved by retraining with more images. Image Credit: IDS Imaging Development Systems GmbH

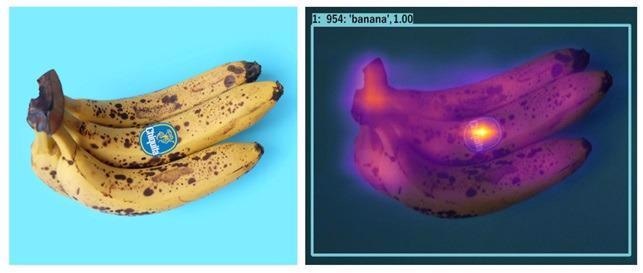

This is where the attention map helps. It is a kind of heat map that highlights the image regions or content the neural network pays most attention to, and which therefore influence its decisions.

In IDS NXT lighthouse, generation of this visualization is enabled during training, based on the decision paths the network learns; this allows the network to produce such a heat map for every image while the analysis is running.
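The article does not describe how IDS computes its attention maps. As a stand-in, the Python sketch below uses occlusion sensitivity, a different but simple, model-agnostic technique: cover one patch of the image at a time and record how much the model's score drops. The toy "model" and image are hypothetical; the scorer deliberately looks only at the top-left corner, much as a biased network might fixate on a label.

```python
def occlusion_heat_map(image, score, patch=2, fill=0.0):
    """Occlusion sensitivity: blank out each patch and record the score drop.

    image: 2D list of pixel values; score: function mapping an image to a class score.
    Larger values mean the model relied more on that region.
    """
    height, width = len(image), len(image[0])
    baseline = score(image)
    heat = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            occluded = [row[:] for row in image]  # copy so the original is untouched
            for y in range(top, min(top + patch, height)):
                for x in range(left, min(left + patch, width)):
                    occluded[y][x] = fill
            drop = baseline - score(occluded)
            for y in range(top, min(top + patch, height)):
                for x in range(left, min(left + patch, width)):
                    heat[y][x] = drop
    return heat


def toy_score(img):
    # Hypothetical model: its score depends only on the top-left 2x2 region.
    return sum(img[y][x] for y in range(2) for x in range(2)) / 4


image = [[1.0] * 4 for _ in range(4)]
for row in occlusion_heat_map(image, toy_score):
    print(row)
# [1.0, 1.0, 0.0, 0.0]
# [1.0, 1.0, 0.0, 0.0]
# [0.0, 0.0, 0.0, 0.0]
# [0.0, 0.0, 0.0, 0.0]
```

Occlusion needs one forward pass per patch, so gradient-based methods are usually preferred for live heat maps; the sketch is only meant to show what such a map measures.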

This makes critical or seemingly inexplicable AI decisions easier to understand, which ultimately promotes the acceptance of neural networks in industrial environments.

The attention map can also be used to detect and prevent data biases (see Figure 2), which would otherwise cause a neural network to make wrong decisions during inference. This matters because a neural network cannot become ‘smart’ on its own.

Poor input leads to poor output quality. To recognize patterns and make predictions, an AI system is reliant on receiving good data from which it can learn “correct behavior”.

If an AI is built under laboratory conditions using data that is not representative of the later application, or, worse, if patterns in the data encode biases, the system will absorb and apply those biases.
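The effect can be reproduced with a deliberately naive learner. In this Python sketch, with invented features and data, a one-feature "decision stump" is trained on a set in which every banana happens to carry a brand sticker. The learner picks the sticker rather than the fruit's appearance as its rule and then misses a banana without one. A real CNN learns far richer features, but it can absorb a spurious cue in the same way.

```python
# Hypothetical binary features extracted from each training image, plus the label.
# Bias: every banana in this made-up training set carries a brand sticker.
train = [
    {"has_sticker": True,  "curved_yellow": True,  "is_banana": True},
    {"has_sticker": True,  "curved_yellow": True,  "is_banana": True},
    {"has_sticker": True,  "curved_yellow": False, "is_banana": True},   # odd angle
    {"has_sticker": False, "curved_yellow": False, "is_banana": False},
    {"has_sticker": False, "curved_yellow": True,  "is_banana": False},  # look-alike
    {"has_sticker": False, "curved_yellow": False, "is_banana": False},
]


def train_stump(samples, features):
    """Pick the single feature whose value best matches the label on the training data."""
    def accuracy(feature):
        return sum(s[feature] == s["is_banana"] for s in samples) / len(samples)
    return max(features, key=accuracy)


def predict(feature, sample):
    # The stump predicts "banana" exactly when its chosen feature is present.
    return sample[feature]


chosen = train_stump(train, ["has_sticker", "curved_yellow"])
print(chosen)  # has_sticker
print(predict(chosen, {"has_sticker": False, "curved_yellow": True}))  # False
```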

Figure 2. This heat map shows a classic data bias: attention is concentrated on the Chiquita label rather than on the banana itself. Because the training images of bananas were unsuitable or unrepresentative, the CNN has evidently learned that the Chiquita label always indicates a banana. Image Credit: IDS Imaging Development Systems GmbH

Such software tools let users trace the behavior and results of AI vision directly back to weaknesses in the training data set and correct them in a targeted way. This makes AI more comprehensible and explainable for everyone, since at its core it is just statistics and mathematics.

Understanding the mathematics and following it through the algorithm is not always easy, but the confusion matrix and heat maps are tools that make decisions, and the reasons behind them, visible and thus graspable.

We Are Only at the Beginning

When used correctly, AI vision has the potential to improve many vision processes. But hardware alone is not enough to win broad acceptance of AI across the industry. Manufacturers face the challenge of passing on their expertise in the form of user-friendly software and built-in processes that support users.

AI still has a long way to go to catch up with established best practices, which are the product of years of work building up a loyal customer base through extensive documentation, knowledge transfer and many software tools. The good news is that such AI systems and supporting tools are already in development.

Standards and certifications are also being drafted to further increase acceptance and to make AI explainable enough to present convincingly to decision-makers. IDS is supporting this effort.

With IDS NXT, an integrated AI system is now available that any user group can apply quickly and easily as an industrial tool, thanks to a sophisticated, user-friendly software environment, even without in-depth knowledge of image processing, machine learning or application programming.

This information has been sourced, reviewed and adapted from materials provided by IDS Imaging Development Systems GmbH.

For more information on this source, please visit IDS Imaging Development Systems GmbH.