An interview with Darran Milne, outlining the technology and applications of VividQ's holographic displays, conducted at Photonics West 2019.

Please give an introduction to holographic displays.

Holographic display is a method of displaying visual content that is only now taking its first steps into the market. It is based on a totally different physical principle from what you usually find in displays today. When you look at a normal display you are basically looking at a big array of pixels, where each pixel gives some colour depending on a filter that is put in front of it.

You see an image, but that is not really how we see the real world. When you look at the world around you, you are not seeing a 2-D pixel array of colours; you are seeing light reflecting off surfaces and adding up in complex ways to give interference patterns that your eye then interprets as an image.

So say you want to create the perfect display system. You would need to mimic the physical process that nature uses to relay visual information to you. However, rather than actually reflecting light off things, we can compute the light patterns that are reflected from objects and represent them on a display device. We have to compute the entire wave-front reflected from a given object so that it encodes all the visual information, including all of the object's depth information. This complex pattern is known as the hologram. We reflect light off the hologram to your eye and you see a 3-D holographic image.
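The idea of computing the wave-front from an object can be illustrated with the textbook point-source method: treat each point of a point cloud as emitting a spherical wave and sum those waves at every pixel of the hologram plane. The sketch below is a minimal, unoptimised illustration under assumed conditions (a hypothetical function `point_cloud_hologram`, units in metres, scalar monochromatic light); it is not VividQ's algorithm, which the interview does not describe.

```python
import cmath
import math

def point_cloud_hologram(points, width, height, pitch, wavelength):
    """Sum spherical waves from each object point at every hologram pixel.

    points: list of (x, y, z, amplitude), z = distance from the hologram plane.
    Returns a row-major list of complex field values, one per pixel.
    """
    k = 2 * math.pi / wavelength  # wavenumber
    field = [0j] * (width * height)
    for row in range(height):
        # pixel-centre coordinates, hologram centred on the optical axis
        py = (row - height / 2 + 0.5) * pitch
        for col in range(width):
            px = (col - width / 2 + 0.5) * pitch
            total = 0j
            for x, y, z, a in points:
                r = math.sqrt((px - x) ** 2 + (py - y) ** 2 + z ** 2)
                total += a * cmath.exp(1j * k * r) / r  # spherical wave e^{ikr}/r
            field[row * width + col] = total
    return field

# One green point 10 cm behind a 4 x 4 patch of 8 micrometre pixels
field = point_cloud_hologram([(0.0, 0.0, 0.1, 1.0)], 4, 4, 8e-6, 520e-9)
print(len(field))
```

The phase of each value, `cmath.phase(v)`, is the kind of pattern a phase-only display would show.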

This contrasts with what is widely described in the AR/VR industry as a hologram, where the term is often used to mean any virtual object placed in a scene. When we say hologram, we mean it in the full academic sense of the word: creating a full 3-D object using only the interference properties of light.

VividQ started off in 2017 with a very rough prototype. We managed to get some initial investment, and for the last two years we have been building that into a product. It turns out it is very difficult to sell just algorithms to people, so we have actually had to make the entire software pipeline ourselves: everything from how you capture your 3-D data from 3-D sources like game engines and 3-D cameras, through to how you output that onto a display device that can support holographic display.

Now, an optical engineer or any researcher can take our entire software package, drop it into their system and have an end-to-end solution that allows them to connect their source data with the display and create genuine 3-D images.

Please give a brief overview of your talk at Photonics West 2019.

The talk was to show some of the recent results we have had in making that pipeline from content to display real-time. One of the biggest barriers to holographic display has been the massive amount of computing that is required in order to achieve this.

If you want to create a 3-D holographic image, what you must do is simulate light propagation from every point of a given object to every point where you might want to look at it. With a high-resolution object that has tens of millions of points, you can imagine that is a lot of wave-propagation calculations. Historically this has taken minutes or even hours, and even in very recent publications it has still taken several hundred milliseconds to compute a single frame.
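A rough operation count shows why the brute-force approach is so slow: every object point must be propagated to every hologram pixel, so the work grows as points × pixels. The figures below assume a 1920 × 1080 hologram and a hypothetical throughput of 10^12 point-pixel evaluations per second; both are illustrative assumptions, not measurements from VividQ's system.

```python
def brute_force_cost(num_points, width, height):
    """Point-to-pixel wave evaluations for one brute-force hologram frame."""
    return num_points * width * height

def seconds_per_frame(num_points, width, height, evals_per_second):
    """Frame time at an assumed, constant evaluation throughput."""
    return brute_force_cost(num_points, width, height) / evals_per_second

evals = brute_force_cost(10_000_000, 1920, 1080)
print(f"{evals:.3e} evaluations per frame")  # 2.074e+13
print(f"{seconds_per_frame(10_000_000, 1920, 1080, 1e12):.1f} s per frame")  # 20.7
```

At roughly 20 seconds per frame under these assumptions, brute force is about three orders of magnitude away from the tens of milliseconds needed for real-time display, which is why getting there takes algorithmic changes rather than faster hardware alone.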

Our first big innovation is that we have taken it down to tens of milliseconds, so that we now do the entire process in real time. The talk was about how we do that: how we make this pipeline so efficient and the algorithm so fast that you can literally play a holographic computer game. One of the demos we showed was our holographic system hooked into the Skyrim engine, showing that our algorithms are so fast that you can now play holographic Skyrim on our prototype holographic headset.

How has VividQ software addressed the problem of 3D data distribution and visualisation?

We have not addressed distribution so much. As part of the package we do have a compression module which allows us to shrink data coming out of 3-D data sources to a manageable size. The type of data we like is in the form of point clouds, which means we collect a massive stack of 3-D vectors from a particular scene. This gives you all the depth information you need; however, if you do that for a sufficiently complex scene you suddenly end up with many gigabytes of data. This is why we designed and built a compression tool that basically takes that data and shrinks it down to maybe one or two percent of its original size, so that we can distribute it faster.
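To give a sense of scale for point-cloud data: storing x, y, z as 32-bit floats costs 12 bytes per point, so 100 million points is already 1.2 GB before any colour. One simple lossy reduction step is to quantise coordinates onto a 16-bit grid inside a known bounding box and drop points that land in the same grid cell. The sketch below shows only that step, with hypothetical function names; it is not VividQ's compression tool, and reaching one or two percent of the original size would need much more than this (for example, spatial and entropy coding).

```python
import struct

def raw_size(points):
    """Bytes needed to store points as little-endian float32 x, y, z."""
    return len(points) * struct.calcsize("<3f")

def quantize(points, lo, hi, bits=16):
    """Snap points to an integer grid inside the box [lo, hi] and drop duplicates."""
    if not 1 <= bits <= 16:
        raise ValueError("bits must be in 1..16 to fit an unsigned 16-bit field")
    levels = (1 << bits) - 1
    seen, out = set(), []
    for p in points:
        q = tuple(
            min(levels, max(0, round((c - a) / (b - a) * levels)))  # clamp to grid
            for c, a, b in zip(p, lo, hi)
        )
        if q not in seen:  # several points in one cell collapse to one
            seen.add(q)
            out.append(q)
    return out

def pack(qpoints):
    """Serialise quantised points as little-endian uint16 triples (6 bytes each)."""
    return b"".join(struct.pack("<3H", *q) for q in qpoints)

cloud = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0), (1e-9, 0.0, 0.0)]
q = quantize(cloud, (0.0, 0.0, 0.0), (1.0, 1.0, 1.0))
print(raw_size(cloud), len(pack(q)))  # 36 12
```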

That is not really the core of the business or tech, but rather just an enabling tool that allows for efficient streaming of data to holographic displays.

Please outline the technology behind the software.

There are several key components that are required for holographic display. You cannot just use a normal display like what you would find on an iPad or TV. The way we generate the hologram is by reflecting light off a display that is showing a particular pattern of phase.

This phase pattern is what we call a hologram - we reflect light off that and then the wave-front of the light is modulated by that pattern which appears as an object when you look at the display. Because of that we need a type of display known as a spatial light modulator. This enables you to create that phase mask and to update it as fast as you like so you can have a moving image.
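A phase-only spatial light modulator cannot show an arbitrary complex wave-front; it displays one phase value per pixel, usually chosen from a limited set of drive levels. A minimal sketch, assuming a phase-only SLM with 256 evenly spaced levels covering 0 to 2π (real devices need calibration lookup tables), keeps the phase of each complex sample and maps it to a level. The function name `to_slm_levels` is hypothetical.

```python
import cmath
import math

TWO_PI = 2 * math.pi

def to_slm_levels(field, levels=256):
    """Discard amplitude, keep phase, and quantise it to an SLM drive level."""
    out = []
    for v in field:
        phase = cmath.phase(v) % TWO_PI  # wrap from (-pi, pi] into [0, 2*pi)
        # round to the nearest level; a phase just below 2*pi wraps to level 0
        out.append(round(phase / TWO_PI * levels) % levels)
    return out

print(to_slm_levels([1 + 0j, 1j, -1 + 0j, -1j]))  # [0, 64, 128, 192]
```

Recomputing and uploading such a mask for every frame is what turns a static phase pattern into a moving holographic image.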

Our software is currently built on top of an NVIDIA stack, so we rely heavily on GPUs. In fact our underlying code is written in very low-level CUDA C (NVIDIA's GPU language), and on top of that there is a C++ layer and then a C API, so that developers can easily code against it to hook up their SLM or their content.

In terms of the other technologies we support, as I said, we interface with Unity and Skyrim, and we are adding support for Unreal right now. We are compatible with a host of 3-D cameras, such as the Stereolabs ZED cameras, the pmd pico flexx and a few others. We have mostly got content covered, and it should be relatively easy for engineers to implement.

What types of people or industries should use this software?

Anyone designing holographic display for basically any purpose should use this software. We have identified augmented or mixed reality as the first potential target. In our view, for MR specifically to achieve real consumer use, you need to overcome several of the display issues that it currently has.

A major topic of the conference this week has been the so-called vergence-accommodation conflict. This is the effect you get in current-generation displays when your eyes are forced to converge on a certain object, but because the image presented to your eyes is fundamentally two-dimensional, your eyes focus at a different depth from where they are converging.

Imagine you are always focusing here, but your eyes are being told the object is over there. This is a very confusing sensation and has caused a lot of the nausea, headaches and dislocation from reality people experience when using MR or VR. Holographic technology naturally fixes this because you are presenting the eye with a genuine 3-D image, and so you do not run into the same problems.

The eye reacts as it would to a real object, and you do not get the same unfortunate effects. It is an easy solution for making the experience better and getting AR into the consumer sphere.
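The size of the conflict can be put in numbers. Vergence follows the distance of the virtual object, while a conventional headset's optics place the image at one fixed focal distance, and focus demand is measured in dioptres (1 / distance in metres). A minimal sketch, assuming a 63 mm interpupillary distance and a hypothetical headset focal plane at 2 m:

```python
import math

def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two lines of sight when fixating a point straight ahead."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))

def focus_demand_dioptres(distance_m):
    """Accommodation needed to focus at a given distance."""
    return 1.0 / distance_m

def vac_mismatch_dioptres(virtual_distance_m, focal_plane_m):
    """Gap between where the eyes converge and where they must focus."""
    return abs(focus_demand_dioptres(virtual_distance_m)
               - focus_demand_dioptres(focal_plane_m))

# A virtual object at 0.5 m on a display whose image sits at 2 m
print(round(vergence_angle_deg(0.5), 2))  # eyes converge as if at 0.5 m
print(vac_mismatch_dioptres(0.5, 2.0))    # 1.5
```

In an ideal display that reproduces the object's true wave-front, the eye focuses at the object's own distance, so the mismatch term is zero.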

We also have applications like head-up displays for cars. You have probably seen a head-up display in cars and aircraft. It shows 2-D information, so your speed or RPM is somehow displayed on the windscreen. Imagine instead that we are able to populate the area outside the windscreen with icons, so that you have GPS arrows that actually look like they are on the road, or highlights on road signs and hazards in the space around you.

You would look through your windscreen and you would see virtual objects out in the world with you. You can imagine all kinds of uses. Of course the kind of thing that you would want to use it for is navigation - if you have an arrow on your GPS, sometimes it can be kind of difficult to tell which direction it means.

This way the arrow could be hovering directly above the place you wanted to turn. For that you need depth of field - you need the arrow to be out in the world and have some range. Holographic display gives you that.

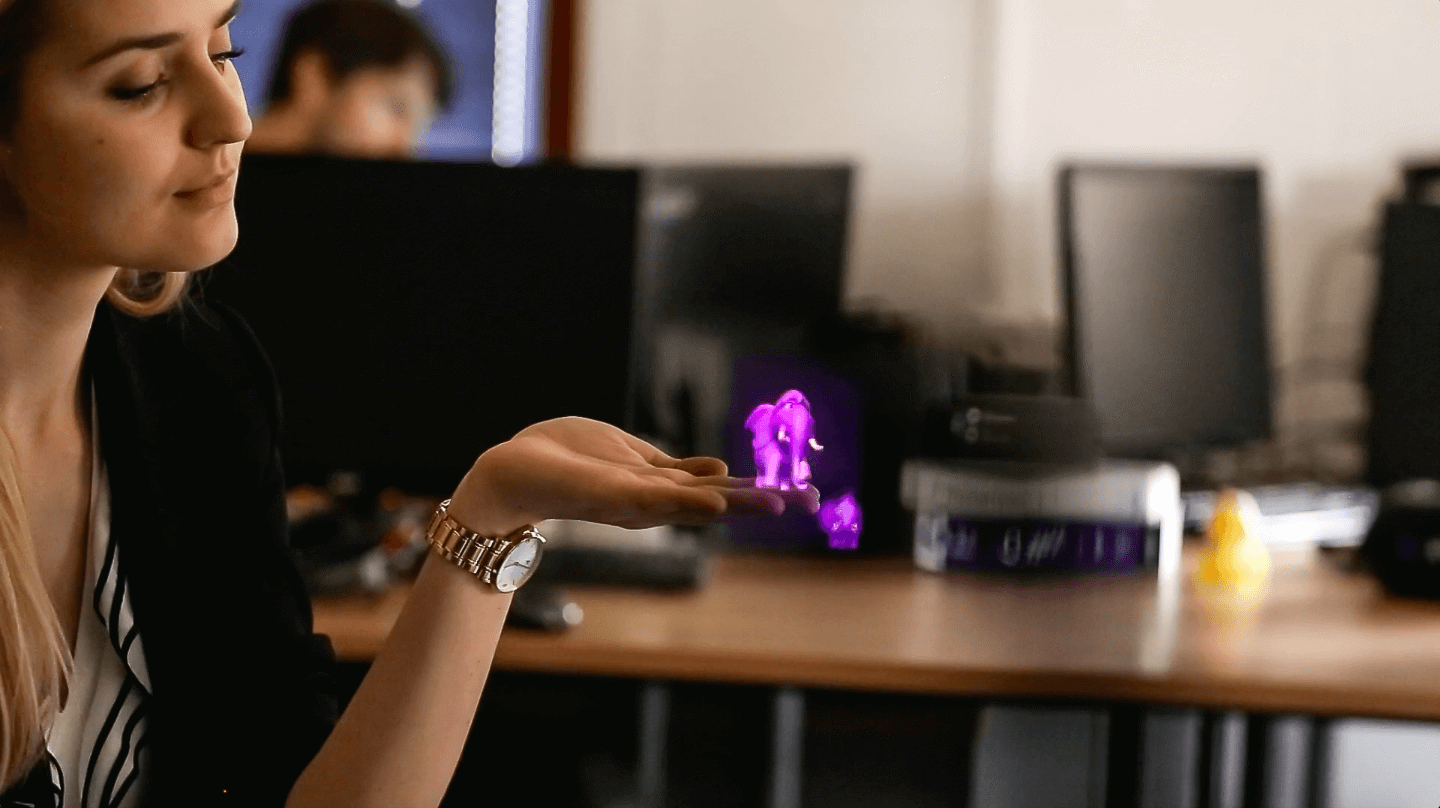

Beyond that, the dream is to eventually have holographic smartphones and tablets. With holographic phones there would be a full 3-D display in your hand. That is a little further off, as it is not currently doable with the available hardware. However, by getting the AR/VR sector moving and then getting into automotive, those kinds of things would follow very soon, in the next three to five years.

How do you think VividQ will transform display technology?

I think that holographic display will be the dominant display technology of the next decade, and VividQ will be the underlying software powering all of that, in the same way that companies like ARM are basically the supplier to everybody making mobile phone chips and so forth.

You will find our software embedded in every device that has a holographic display. Holographic display will be the thing everyone uses, because once you have full 3-D, where you don't need to wear glasses or any of that stuff, why would you ever have a 2-D screen again?

About Darran Milne

A mathematician and theoretical physicist by training, Darran holds a PhD in quantum information theory and quantum optics from the University of St Andrews and the Max Planck Institute. He began his career as an analyst at a large financial software company, leading new development initiatives and managing client implementation projects. As one of the co-founders of VividQ and its former CTO, he directed the original research and development efforts while maintaining partner collaborations and investor relations. As CEO, he has been responsible for the execution of the company's technology and commercial strategies, establishing VividQ as the underlying software provider for holographic display.

Disclaimer: The views expressed here are those of the interviewee and do not necessarily represent the views of AZoM.com Limited (T/A) AZoNetwork, the owner and operator of this website. This disclaimer forms part of the Terms and Conditions of use of this website.